Research

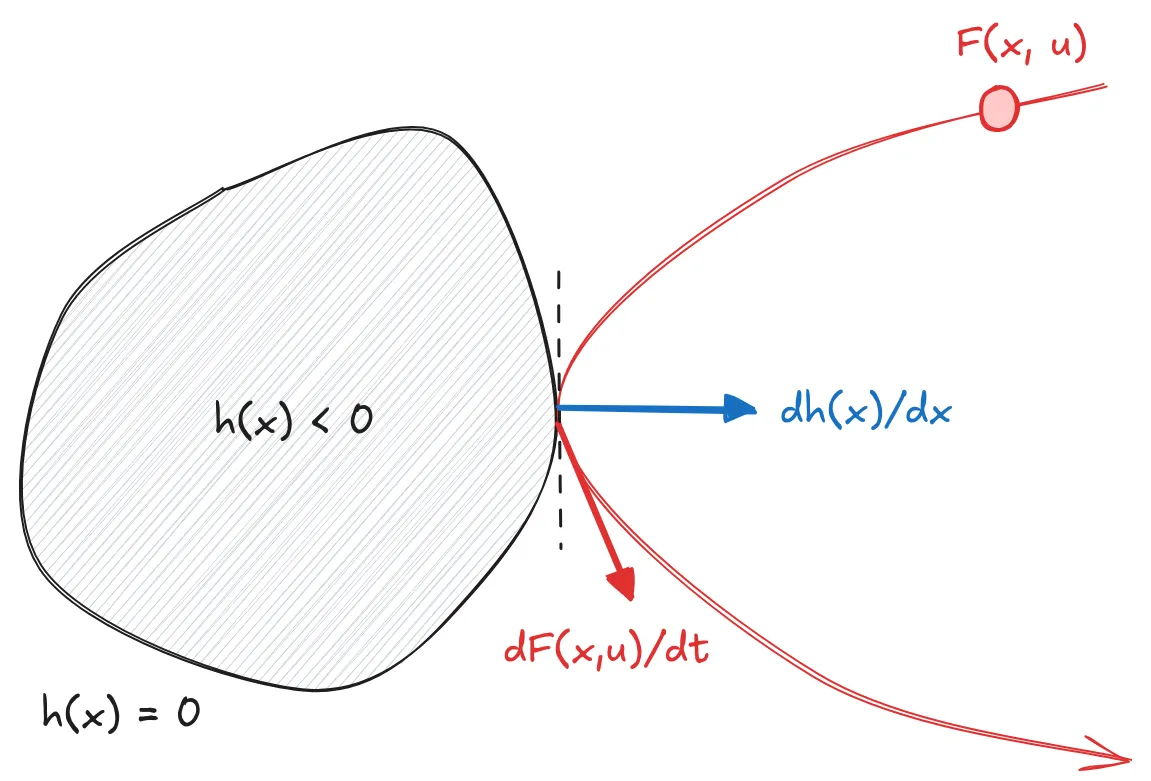

I worked with Group 76 (Controls and Autonomous Systems) at MIT Lincoln Labs on control barrier functions (CBFs) for dynamic obstacle avoidance in ground robots. CBFs are a relatively recent technique for controlling dynamical systems that, under certain conditions, can provide mathematical guarantees that the system will never enter undesirable states. The core idea is shown in the image: take a function h(x) that is positive in the safe set and negative in the undesirable set, and a system evolving according to dx/dt = F(x,u). If the system can be controlled so that, on the boundary between the two sets (where h(x) = 0), the direction of motion never points against the gradient of h(x), that is, ∇h(x) · F(x,u) ≥ 0, then the system is provably safe, and h(x) is called a CBF.
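
As a minimal sketch of how this condition is used in practice, the code below implements a CBF "safety filter" for a toy system. It assumes single-integrator dynamics F(x,u) = u, a circular obstacle, and the common relaxed condition ∇h(x) · F(x,u) ≥ -α h(x), which reduces to the boundary condition above when h(x) = 0. The function names and the gain `alpha` are illustrative choices, not the code used in this project. Because the condition is linear in u, finding the safe input closest to a reference command has a closed-form projection.

```python
def h(x, center, radius):
    """Barrier for a circular obstacle: positive outside, zero on its boundary."""
    dx, dy = x[0] - center[0], x[1] - center[1]
    return dx * dx + dy * dy - radius * radius


def grad_h(x, center):
    """Analytic gradient of h with respect to the position x."""
    return (2.0 * (x[0] - center[0]), 2.0 * (x[1] - center[1]))


def safety_filter(x, u_ref, center, radius, alpha=1.0):
    """Return the input closest to u_ref that satisfies grad_h . u >= -alpha * h.

    Assumes single-integrator dynamics F(x, u) = u, so the CBF condition is
    linear in u and this one-constraint QP has a closed-form solution.
    """
    a = grad_h(x, center)
    b = -alpha * h(x, center, radius)
    slack = a[0] * u_ref[0] + a[1] * u_ref[1] - b
    if slack >= 0.0:
        return u_ref  # the reference input already satisfies the condition
    norm_sq = a[0] * a[0] + a[1] * a[1]
    if norm_sq == 0.0:
        raise ValueError("gradient vanishes at the obstacle center")
    # Project u_ref onto the half-space {u : a . u >= b}.
    scale = -slack / norm_sq
    return (u_ref[0] + scale * a[0], u_ref[1] + scale * a[1])
```

For a robot at (-2, 0) commanded straight at a unit-radius obstacle at the origin with u_ref = (1, 0), `safety_filter((-2.0, 0.0), (1.0, 0.0), (0.0, 0.0), 1.0)` returns (0.75, 0.0): it slows the approach just enough to satisfy the condition, while a command moving away passes through unchanged. With JAX, `jax.grad(h)` could replace the hand-written `grad_h`.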

For realistic systems, actually obtaining such a guarantee is easier said than done: uncertainty in the system dynamics and limits on the control input u both pose challenges. My work revolved around constructing CBFs for Ackermann-drive ground robots from LiDAR data. I worked largely in NVIDIA Isaac Sim and implemented CBFs using JAX for automatic differentiation. Because constructing h(x) with classical techniques was particularly challenging for our system, I turned to neural CBF techniques that learn CBFs from data, as described in “How to Train Your Neural Control Barrier Function.” I used a Graph Neural Network, trained on synthetic LiDAR data I collected in Isaac Sim, to learn h(x).
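
For contrast with the learned approach, a naive classical barrier built directly from a LiDAR scan might look like the sketch below: convert the scan to obstacle points, then use the distance to the nearest point minus a safety margin. This is a hypothetical baseline, not the method used in this work; the function names, the `safe_radius` margin, and the log-sum-exp smoothing (used because a hard minimum is not differentiable) are all assumptions. It also treats the robot as a point and ignores Ackermann steering limits, so nothing ensures that an admissible control input satisfying the CBF condition always exists.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, pose):
    """Convert a planar LiDAR scan into world-frame obstacle points.

    pose = (x, y, heading) of the sensor; invalid returns (inf/nan) are skipped.
    """
    px, py, theta = pose
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue
        beam = theta + angle_min + i * angle_increment
        points.append((px + r * math.cos(beam), py + r * math.sin(beam)))
    return points


def lidar_barrier(position, points, safe_radius, sharpness=10.0):
    """Smooth distance barrier: approximately min_i |p - q_i| - safe_radius.

    A log-sum-exp soft-min replaces the hard min so that h is differentiable.
    The soft-min never exceeds the true minimum distance, so it is conservative.
    """
    dists = [math.hypot(position[0] - qx, position[1] - qy) for qx, qy in points]
    d_min = min(dists)
    # Shift by d_min before exponentiating for numerical stability.
    total = sum(math.exp(-sharpness * (d - d_min)) for d in dists)
    soft_min = d_min - math.log(total) / sharpness
    return soft_min - safe_radius
```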

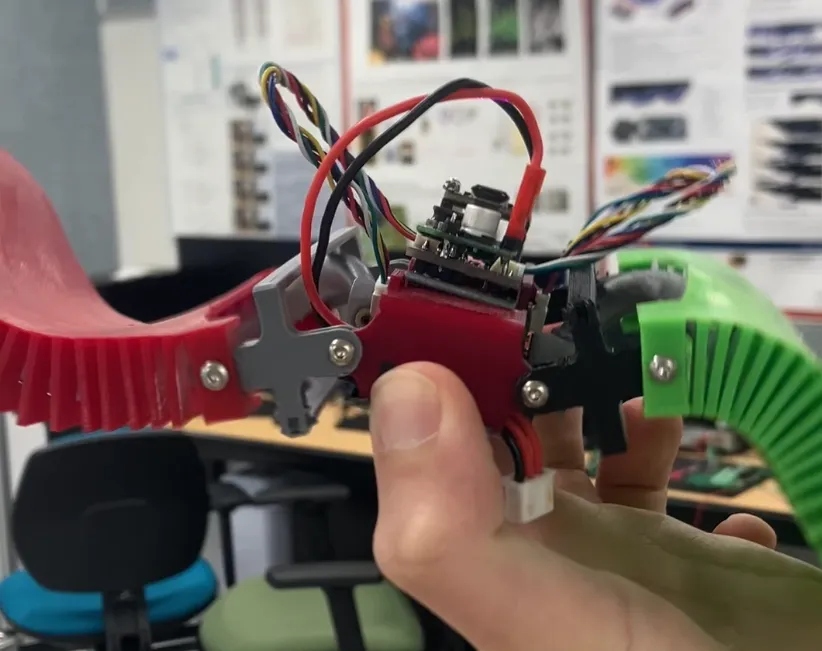

I work on the Single-Actuator Wave (SAW) robot project at CEI. The core idea behind our robots is that they can use their wave-shaped bodies to modify terrain, including building mounds, digging holes, and burrowing. As the name suggests, each robot's wave is driven by a single motor that turns a central helix.

My work is mostly about getting two or more of these robots to collaborate, since they are much more successful at their task when they climb on top of each other or use their peers as solid platforms. To this end, this semester I have mostly been working on embedded signal processing to passively infer the state of another robot (such as its wave frequency or its location) using only a microphone. I’ve enjoyed working around the constraints of an embedded environment and have learned a lot about signals and microcontrollers. For example, I wrote a custom fixed-point FFT to get reasonable performance out of our Cortex-M0+, which has no FPU. I’ve also done some PCB design, such as integrating the microphone amplifier into the main robot PCB.
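
The core of a fixed-point FFT can be sketched in Python by simulating Q15 arithmetic (16-bit integers representing values in [-1, 1)), using only integer multiplies and shifts, with each stage halved to avoid overflow. This is a behavioral sketch under those assumptions, not the robot's firmware: on the Cortex-M0+ it would be written in C with 16/32-bit integer types and a precomputed twiddle table, and the names `fft_q15` and `dominant_frequency` are hypothetical. The peak-bin step shows one way a wave frequency could be read off a microphone signal.

```python
import math

Q15_SCALE = 1 << 15  # 1.0 in Q15; the largest representable value is 32767


def to_q15(x):
    """Convert a float in [-1, 1) to a saturated Q15 integer."""
    return max(-32768, min(32767, int(round(x * Q15_SCALE))))


def q15_mul(a, b):
    """Rounded Q15 product, as an integer multiply-and-shift would compute it."""
    return (a * b + (1 << 14)) >> 15


def fft_q15(re, im):
    """In-place iterative radix-2 FFT on lists of Q15 integers.

    Every butterfly stage halves its outputs to avoid overflow, so the
    result approximates the true DFT divided by N.
    """
    n = len(re)
    assert n > 0 and (n & (n - 1)) == 0, "length must be a power of two"
    # Reorder inputs into bit-reversed index order.
    j = 0
    for i in range(1, n):
        bit = n >> 1
        while j & bit:
            j ^= bit
            bit >>= 1
        j ^= bit
        if i < j:
            re[i], re[j] = re[j], re[i]
            im[i], im[j] = im[j], im[i]
    size = 2
    while size <= n:
        half = size // 2
        for k in range(half):
            # Twiddle W = exp(-2*pi*i*k/size); firmware would use a lookup table.
            angle = -2.0 * math.pi * k / size
            wr, wi = to_q15(math.cos(angle)), to_q15(math.sin(angle))
            for start in range(0, n, size):
                a, b = start + k, start + k + half
                # t = W * x[b], a complex multiply in Q15.
                tr = q15_mul(re[b], wr) - q15_mul(im[b], wi)
                ti = q15_mul(re[b], wi) + q15_mul(im[b], wr)
                # Butterfly, right-shifted by 1 to halve the outputs.
                re[b], im[b] = (re[a] - tr) >> 1, (im[a] - ti) >> 1
                re[a], im[a] = (re[a] + tr) >> 1, (im[a] + ti) >> 1
        size *= 2
    return re, im


def dominant_frequency(samples, sample_rate):
    """Estimate the strongest non-DC frequency (Hz) in a block of float samples."""
    n = len(samples)
    re = [to_q15(s) for s in samples]
    im = [0] * n
    fft_q15(re, im)
    # For real input only bins 1..n/2-1 are unique (bin 0 is DC).
    power = [re[k] * re[k] + im[k] * im[k] for k in range(1, n // 2)]
    peak_bin = 1 + power.index(max(power))
    return peak_bin * sample_rate / n
```

For example, a 0.5-amplitude 800 Hz tone sampled at 6400 Hz in a 64-sample block falls exactly in bin 8, and `dominant_frequency` returns 800.0. Halving at every stage gives up some precision (the output is the DFT divided by N) in exchange for helping keep intermediate values within 16-bit range.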

During my time at the AIRLab, I worked on urgency-aware navigation for hospital robots. Hospitals, especially ERs, can be chaotic environments that are difficult for robots to navigate. Furthermore, many tasks in the ER are time-critical: a robot that fails to navigate properly and blocks doctors and nurses can cost lives, even if the robot’s own task is not critical. To help solve this problem, I worked on software packages for “urgency-aware” navigation, in which the robot estimates the urgency of the people around it and gives way where necessary. I built modules that tracked people in 3D space, assigned each person an urgency score based on their motion, and planned paths around static obstacles and humans.
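
To illustrate what an urgency score based on motion could look like, here is a hypothetical sketch. It scores a tracked person by speed (normal walking pace scores 0, running scores 1) and yields only when an urgent person's predicted path passes close to the robot within a short horizon. The 2D ground-plane projection, the speed thresholds, the `clearance` and `horizon` parameters, the stationary-robot assumption, and the constant-velocity prediction are all illustrative assumptions, not the modules built at the AIRLab.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedPerson:
    """A tracked person projected onto the ground plane, in the robot frame."""
    x: float   # position (m), robot at the origin
    y: float
    vx: float  # estimated velocity (m/s)
    vy: float


def urgency_score(person, walk_speed=1.4, run_speed=3.0):
    """Map speed to [0, 1]: 0 at or below walking pace, 1 at or above a run."""
    speed = math.hypot(person.vx, person.vy)
    return min(1.0, max(0.0, (speed - walk_speed) / (run_speed - walk_speed)))


def closest_approach(person, horizon):
    """Smallest distance to the (assumed stationary) robot over [0, horizon] s."""
    v2 = person.vx ** 2 + person.vy ** 2
    if v2 == 0.0:
        t = 0.0
    else:
        # Time minimizing |p + v t| under constant velocity, clamped to the horizon.
        t = -(person.x * person.vx + person.y * person.vy) / v2
        t = min(horizon, max(0.0, t))
    return math.hypot(person.x + person.vx * t, person.y + person.vy * t)


def should_yield(person, clearance=1.0, horizon=3.0, threshold=0.5):
    """Give way if an urgent person is predicted to pass within `clearance`."""
    return (urgency_score(person) >= threshold
            and closest_approach(person, horizon) <= clearance)
```

Keeping the urgency score separate from the geometric check means either piece could be replaced (for example, by a learned score) without changing the other.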